One of the constraints often overlooked when developing Retrieval Augmented Generation (RAG) based question answering systems is memory (RAM). Quite simply, a higher memory requirement means a higher cost.

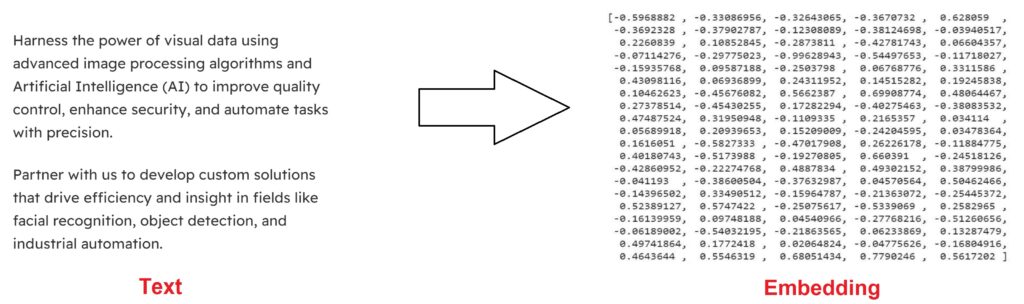

RAG based systems are built on vector embeddings. Embeddings are a complex concept, but simply put, they are numerical representations of a piece of text (generally a list of floating-point numbers). Converting text to embeddings lets us measure the similarity between texts; embeddings can also be used to fine-tune a model or even build one from scratch.

We recently came across a requirement where an online library needed to implement semantic search and Q&A over 500K (0.5 million) scientific research papers (documents). During initial estimates, we came up with the following memory requirements for the embeddings alone:

Number of documents = 500000

Approximate embeddings generated per document = 10

Total embeddings generated = 5000000

Dimension of the vector embedding = 1536 (assuming OpenAI’s text-embedding-ada-002, this will vary based on model used)

Size of each floating-point number = 4 bytes

Memory footprint per embedding = 1536 * 4 = 6144 bytes

Memory footprint for 5000000 embeddings = Total embeddings generated * Memory footprint per embedding = 5000000 * 6144 = 30,720,000,000 bytes, or about 30.7 GB!

If you look at the different vector databases on the market, they recommend provisioning 1.5x (Qdrant) to 2x (Weaviate) the raw embedding size, which would take our memory requirement to roughly 46 GB to 61 GB! The extra memory is for metadata (indexes, data, etc.) as well as for the temporary segments needed for optimization. If your budget allows, that is the recommended configuration to go for; if not, please read on.
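The estimate above can be reproduced with a few lines of Python. This is only a sizing sketch: the function names are ours, and the overhead factors are simply the 1.5x and 2x multipliers quoted above, so check each vendor's current sizing guidance before relying on them.

```python
BYTES_PER_FLOAT32 = 4

def raw_embedding_bytes(num_documents, embeddings_per_document, dimension):
    """Size of the embeddings alone, excluding index and metadata overhead."""
    total_embeddings = num_documents * embeddings_per_document
    return total_embeddings * dimension * BYTES_PER_FLOAT32

def provisioned_gb(raw_bytes, overhead_factor):
    """Raw size scaled by a vendor-style overhead multiplier, in decimal GB."""
    return raw_bytes * overhead_factor / 1e9

raw_bytes = raw_embedding_bytes(500_000, 10, 1536)
print(f"raw embeddings: {raw_bytes:,} bytes = {raw_bytes / 1e9:.2f} GB")

# Multipliers as quoted in the article (assumed, not read from any API)
for factor in (1.5, 2.0):
    print(f"with {factor}x overhead: {provisioned_gb(raw_bytes, factor):.1f} GB")
```

Changing any one input (document count, chunks per document, or dimension) scales the result linearly, which is why the options below focus on the dimension and on the bytes used per value.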

(Note that vector databases allow storing a percentage of the vectors on disk, which is significantly cheaper, but this also degrades search performance significantly.)

We looked at some ways to reduce this footprint without losing too much accuracy and these were our broad findings:

1. Reduce the vector embedding dimension

We can reduce the embedding dimensions by replacing OpenAI’s text-embedding-ada-002 with a model that produces smaller vectors. For example, we could replace it with all-MiniLM-L6-v2, which has a dimension of 384. That would reduce the memory requirement by 4x, although there could be a reduction in accuracy. We need to consider the maximum tokens per embedding as well: smaller embedding models sometimes have a lower token limit, which increases the number of embeddings needed per document and can offset the memory savings entirely.
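To see how a lower token limit can cancel out the savings, here is a small sketch. The figure of 40 embeddings per document for the smaller model is purely hypothetical, chosen to show the break-even point; the real number depends on your documents and chunking strategy.

```python
def raw_gb(num_documents, embeddings_per_document, dimension, bytes_per_value=4):
    """Raw embedding size in decimal GB, excluding any database overhead."""
    return num_documents * embeddings_per_document * dimension * bytes_per_value / 1e9

baseline = raw_gb(500_000, 10, 1536)     # 1536 dimensions, 10 embeddings per document
same_chunks = raw_gb(500_000, 10, 384)   # 384 dimensions, same number of embeddings
more_chunks = raw_gb(500_000, 40, 384)   # 384 dimensions, hypothetical 4x more embeddings

print(f"baseline:            {baseline:.2f} GB")
print(f"384-dim, 10 per doc: {same_chunks:.2f} GB")
print(f"384-dim, 40 per doc: {more_chunks:.2f} GB")
```

With a quarter of the dimensions, the savings disappear once the smaller model needs four times as many embeddings per document.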

2. Quantization

Quantization is a method for storing high-dimensional vectors efficiently. It compresses the vectors while approximately preserving their relative distances. At the time of writing this article, there are three ways to achieve quantization:

Scalar Quantization

Converts each floating-point component of a vector to an 8-bit unsigned integer, so every component takes 1 byte instead of 4. This not only reduces memory by a factor of 4, but also speeds up search because comparing 8-bit integers is fast. The tradeoff is a slight decrease in search accuracy, which tends to be less significant for high-dimensional vectors. It is recommended as the default quantization method.
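A minimal pure-Python sketch of the idea follows. It assumes a single global min/max range for all components, which is a simplification; a vector database will typically choose the range more carefully, and you would normally enable quantization through its configuration rather than implement it yourself. The function names are illustrative.

```python
import random

def fit_range(vectors):
    """Find the global min and max over all components (a simplifying assumption)."""
    values = [value for vector in vectors for value in vector]
    return min(values), max(values)

def quantize(vector, low, high):
    """Map each float in [low, high] to a code in 0..255, one byte per component."""
    step = (high - low) / 255
    return bytes(min(255, max(0, round((value - low) / step))) for value in vector)

def dequantize(codes, low, high):
    """Approximate the original floats from the 8-bit codes."""
    step = (high - low) / 255
    return [low + code * step for code in codes]

random.seed(0)
vectors = [[random.uniform(-1.0, 1.0) for _ in range(1536)] for _ in range(100)]
low, high = fit_range(vectors)

codes = quantize(vectors[0], low, high)
restored = dequantize(codes, low, high)
max_error = max(abs(a - b) for a, b in zip(vectors[0], restored))

print(len(codes), "bytes instead of", 1536 * 4)
print(f"largest rounding error: {max_error:.4f}")
```

Each component is off by at most half a quantization step, which is where the small loss in search accuracy comes from.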

Binary Quantization

As the name suggests, this method converts each floating-point component of a vector to a single bit, reducing the memory footprint by a factor of 32. It is the fastest quantization method, but it requires a good understanding of the data distribution and may additionally require fine-tuning using data augmentation.
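As an illustration, the sketch below keeps only the sign of each component (1 if positive, otherwise 0) and compares vectors by Hamming distance, the number of differing bits. The sign threshold and the helper names are assumptions made for this example, not a description of any particular database's implementation.

```python
import random

def binarize(vector):
    """Pack one bit per component into an integer: 1 if the value is positive, else 0."""
    bits = 0
    for value in vector:
        bits = (bits << 1) | (1 if value > 0 else 0)
    return bits

def hamming_distance(a, b):
    """Count the bit positions where two binarized vectors differ."""
    return bin(a ^ b).count("1")

a = [0.3, -1.2, 0.8, -0.1, 0.5, 0.9, -0.7, 0.2]
b = [0.1, -0.4, -0.6, -0.3, 0.7, 0.2, 0.4, 0.6]
print(hamming_distance(binarize(a), binarize(b)))  # 2: the signs differ at two positions

# Storage for a 1536-dimensional vector: 1536 bits = 192 bytes instead of 6144
random.seed(0)
vector = [random.uniform(-1.0, 1.0) for _ in range(1536)]
packed = binarize(vector).to_bytes(1536 // 8, "big")
print(len(packed), "bytes instead of", 1536 * 4)
```

Because only the sign survives, this works best when the components are roughly centered around zero, which is one reason the data distribution matters.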

Product Quantization

Splits each vector into chunks and clusters the chunks, so that every chunk can be replaced by the 8-bit index of its nearest cluster centroid. Because similar chunks share a centroid, the memory footprint shrinks. It has the largest impact on memory, possibly reducing it by a factor of 64, but with a significant loss in accuracy and search speed.
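The following toy sketch shows the mechanics with a tiny hand-written k-means. Everything here is illustrative: the sizes are deliberately small so it runs quickly, the function names are ours, and it uses 16 centroids per chunk, whereas a real 8-bit code allows up to 256. Each chunk of 16 floats (64 bytes) is replaced by a 1-byte centroid index, which is where a 64x reduction can come from.

```python
import random

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(point, centroids):
    """Index of the centroid closest to the point."""
    return min(range(len(centroids)), key=lambda i: squared_distance(point, centroids[i]))

def kmeans(points, k, iterations=10, seed=0):
    """Very small k-means: start from k random points, then refine the centroids."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        for i, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[i] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def split(vector, num_chunks):
    size = len(vector) // num_chunks
    return [vector[i * size:(i + 1) * size] for i in range(num_chunks)]

def train_codebooks(vectors, num_chunks, k):
    """Learn one codebook (list of centroids) per chunk position."""
    chunked = [split(v, num_chunks) for v in vectors]
    return [kmeans([c[j] for c in chunked], k) for j in range(num_chunks)]

def encode(vector, codebooks):
    """Replace each chunk with the index of its nearest centroid (one byte each)."""
    chunks = split(vector, len(codebooks))
    return bytes(nearest(chunk, book) for chunk, book in zip(chunks, codebooks))

def decode(codes, codebooks):
    """Rebuild an approximate vector by concatenating the chosen centroids."""
    return [x for code, book in zip(codes, codebooks) for x in book[code]]

random.seed(0)
vectors = [[random.uniform(-1.0, 1.0) for _ in range(32)] for _ in range(200)]
codebooks = train_codebooks(vectors, num_chunks=2, k=16)

codes = encode(vectors[0], codebooks)
restored = decode(codes, codebooks)
print(len(codes), "bytes instead of", 32 * 4)
```

The codebooks themselves also need to be stored, and every distance computation goes through them, which is consistent with the accuracy and speed penalty described above.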

The correct choice of quantization type should be made based on the data, available memory, and required indexing/search speed.

Coming back to our title ‘Who ate my cheese?’ – make sure to keep an eye on your cheese portions – after all, nobody wants a mouse-sized memory meltdown!

If you are looking for a custom implementation of AI Search and Q&A over your company data, or want to add AI functionality to your existing system, contact us or email me at elizabeth@beaconcross.com